ThoughtRiver’s latest technology will shake up the legal AI industry with unprecedented accuracy levels.

At ThoughtRiver we like to let our software platform speak for itself. It’s just one reason why, during their evaluation phase, we offer our clients a trial – so they can discover the power of ThoughtRiver for themselves.

We have found that once our customers, partners, and users start using ThoughtRiver, they like what they see.

“ThoughtRiver gives me and my team confidence that we are always reviewing a contract against policy, it’s like a second pair of eyes, a second opinion to make sure we don’t miss anything.”

Ronald Yeo, Legal Contracts Manager, Singtel

There’s a good reason why we’re trusted by companies such as Deloitte, Foley & Lardner, DB Schenker, and Shoosmiths. They’re happy to acknowledge the difference our contract acceleration platform makes to their operations. Our testimonials and case studies speak for themselves.

But we’re on a mission to make all contracts easy to understand for everyone, and that makes us a restless bunch. Great as the product was in 2020, we felt we needed to take it to a new level if we were to help everyone understand all contracts. What we needed was even more accuracy, reached in even less time.

Precision matters

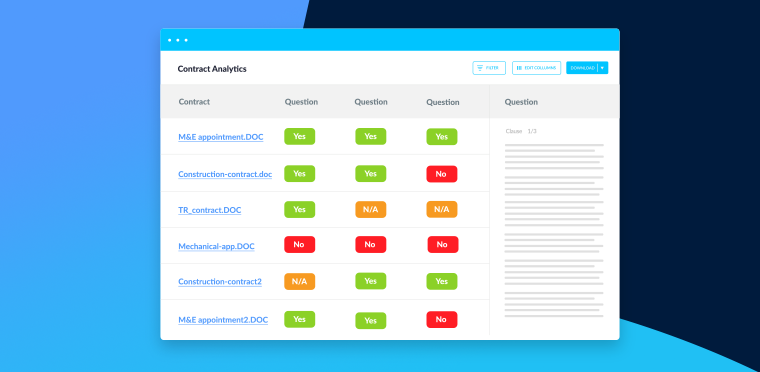

Our Lexible Q&A engine is designed to ask the same human language questions of your contracts as your own lawyers would. ThoughtRiver then reviews each and every contract to the same high level, at pace, and on a consistent basis, identifying risk with a safety-first approach. Safety-first was a conscious design decision.

Just as your lawyers are trained to play it safe, so Lexible has been configured with a slight bias towards ‘false positives’: it flags something as a risk when the AI is unsure, alerting a human lawyer to run a quick review in order to remove any doubt. After all, it’s far safer to produce false positives and manually check that there is in fact no risk than to fail to identify the risk at all.

This is what data scientists call a Recall bias - prioritising finding everything you should and worrying less about hoovering up a few incorrect matches in the process. The flipside of hungry Recall is frugal Precision. A bias to Precision prioritises only finding items that are correct, and worries less about missing matches. Typically these are trade-offs, a little like a see-saw; higher recall results in lower precision and vice versa.
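To make the see-saw concrete, here is a minimal sketch in Python. The scores, labels and thresholds are invented purely for illustration and are not ThoughtRiver data, nor a description of how Lexible works internally. It assumes a hypothetical model that gives each clause a risk score between 0 and 1 and flags anything at or above a chosen threshold.

```python
from typing import List, Tuple


def precision_recall(
    scores: List[float], labels: List[bool], threshold: float
) -> Tuple[float, float]:
    """Return (precision, recall) when every score >= threshold is flagged."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, is_risk in pairs if s >= threshold and is_risk)
    fp = sum(1 for s, is_risk in pairs if s >= threshold and not is_risk)
    fn = sum(1 for s, is_risk in pairs if s < threshold and is_risk)
    # Precision: of everything flagged, how much was a real risk?
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    # Recall: of all the real risks, how many were flagged?
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    return precision, recall


# Hypothetical risk scores for ten clauses, and whether each is truly a risk.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, True, False, True, False, True, False, False, False]

for threshold in (0.35, 0.55, 0.75):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
```

On this toy data, the lowest threshold catches every real risk but also flags two clauses that are not risks (a recall bias), while the highest threshold flags only real risks but misses two of them (a precision bias).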

AI designed for legal due diligence tends to have a large recall bias. These platforms may let precision dip to 60% or less in order to ensure nothing is missed. For the user, this may mean that 40% or more of the provisions surfaced in a search are irrelevant, but this might be ok in a due diligence scenario.

In ThoughtRiver’s case, we automatically produce a first pass risk review of contracts in negotiation (a pure AI process - there is no human in the loop). For this, such low levels of precision would be unthinkable, and the output would be unusable because we would be flagging a huge number of irrelevant risks. Our users need high precision as well as high recall, which is why we could only afford a small recall bias. And even at this level the bias might be a blight on the user experience.

So we challenged ourselves: could we materially remove the bias altogether without reducing overall accuracy?

The AI vs human paradox

AI delivers predictions based on its understanding of the world around it and the examples it has been trained on. But it is always a prediction, and as such will never be correct 100% of the time – just like you are not right 100% of the time.

Tests have shown that legal AI can be more accurate than a typical human lawyer performing the same exercise. Our previous Lexible technology similarly performed better than humans in person vs machine tests (90%+ vs 87%) and was around 10-20x faster.

But we had observed through thousands of interactions that many lawyers require AI to significantly outperform humans as the price for adoption - not only faster but way more accurate. This might seem odd when AI is a tool to augment, not replace, humans, but this expectation is at the root of all technology disruption. Washing machines took off because they are both faster and much better than washing clothes in a sink. Calculators caught on because they not only do the sums in milliseconds, they also never make a mistake (and cause no brain ache).

So the challenge got harder still. Being a bit better is not enough to deliver the next stage of our mission. Not only did Lexible need to balance precision and recall, it needed to boost both to a level far beyond human accuracy and way better than anything on the market in 2020 (and indeed 2022). And it still had to be 10-20x faster.

Anyone who has worked with experimental data science will know the frustrations - for every hypothesis that works to a modest extent, there are 50 that do not work at all. It took two years, a lot of coffee, many wrong turns, a ton of arguments and many more late nights to arrive at a solution design that seemed irresistible.

Finally, we saw the first results in April 2022 for the new Lexible prototype. It still needed work, but the data were unequivocal. Even we were stunned by the results.

The prototype combines the unique Lexible human Q&A framework with next-generation Natural Language Processing technology to deliver incredible power. We have called it Lexible Fusion™.

The results

Lexible Fusion is not a small step in a product release cycle. It is a huge leap for an accuracy-hungry legal industry.

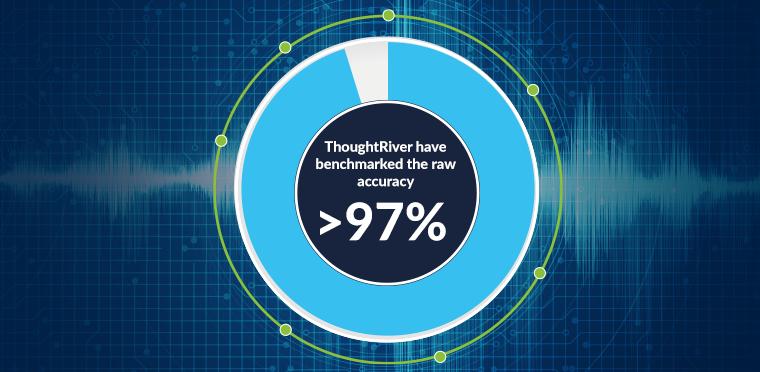

Early analysis of hundreds of thousands of test cases reveals accuracy levels not seen before. This analysis includes a benchmark risk assessment accuracy of 97%. In other words, given 100 risks occurring in a third-party contract the platform has never seen before, ThoughtRiver would be expected to correctly identify, assess and describe 97 of them.

We’ll be releasing more details in the next few weeks. Look out for further information that will explain exactly what has changed, and what you can expect from ThoughtRiver’s revolutionary Lexible Fusion™ engine.